GenML 2024

Thank you for participating in our event!

Event closed


10 December 2024

08:30-17:00

Tel-Aviv

ZOA House TLV

Daniel Frisch St 1

We want to thank our speakers for sharing their expertise and our sponsors who made GenML possible. It was truly exciting to see AI researchers, data scientists, engineers, and enthusiasts coming together for the love of generative AI.

We're looking forward to seeing you at our upcoming ML community events, where we'll continue exploring the frontiers of machine learning together.

GenAI in the Wild: Protecting Your Herd from Predators by Ido Farhi, Senior Data Scientist at Intuit

30 min

Making AI development easy with Prompt Flow by Lior King, Sr. Cloud Solutions Architect at Microsoft

30 min

Unlocking the potential of MLOps & LLMOps: current and future business applications by Yuval Shmuel, W&B Presale Israel at Dorcom

20 min

Exploring Function Calling with Gemini by Sveta Morag, Cloud Solutions Architect at Google Cloud

20 min

Profiling Buyer Interests Using LLM-Generated Graphs by Oded Zinman, Applied Researcher at eBay

20 min

Near-Realtime RAG on Production Data: Bringing AI to the Data by Boris Dahav, Data, Analytics & AI Domain Specialist at Oracle

20 min

Building the Future: AI Infrastructure in the GenAI Era by Ronen Dar, Co-Founder and CTO at Run:ai

15 min

Is My LLM Doing a Good Job? Evaluating LLMs at Scale: Lessons Learned from Lightricks by Asi Messica, VP Data Science at Lightricks

20 min


Agenda

08:30-09:30

Gathering and mingling with refreshments

09:30-09:40

Opening remarks

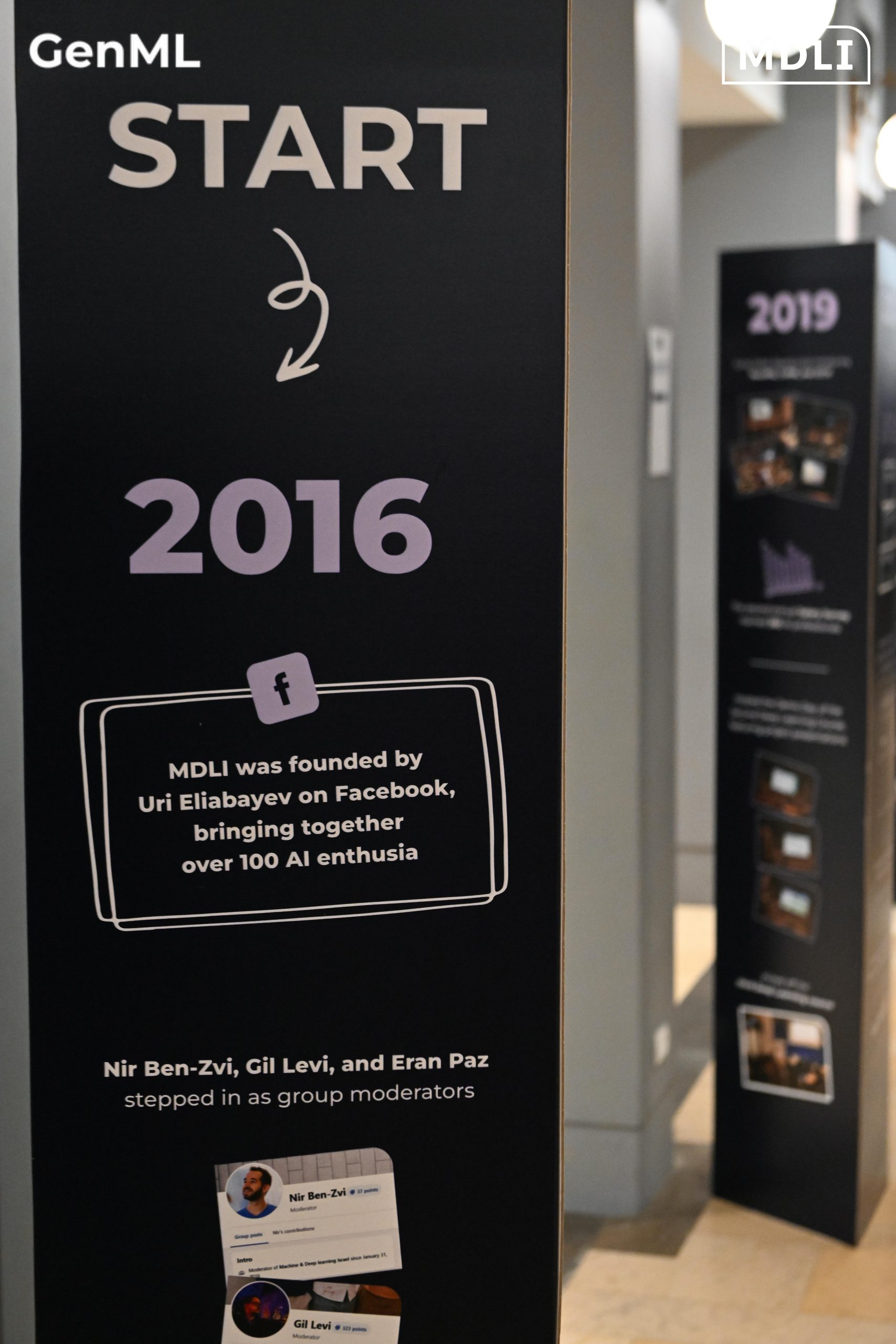

Uri Eliabayev

Founder at MDLI

09:40-10:10

Easily build GenAI applications with RAG, Agents, and Evaluation using Amazon Bedrock

Discover how Amazon Bedrock empowers you to build, evaluate, and deploy enterprise-grade generative AI applications, moving quickly from concept to production.

This session showcases a real-world example of building an agentic application using best-in-class foundation models from Anthropic and others, leveraging Bedrock building blocks for RAG, Agents, prompt flows, and Guardrails.

As a bonus, we’ll share the most exciting generative AI innovations announced at AWS re:Invent 2024 (Dec 2-6).

Gili Nachum

Principal Gen AI & ML Solutions Architect at AWS

10:10-10:40

GenAI in the Wild: Protecting Your Herd from Predators

GenAI is a powerful technology that is being rapidly adopted for a plethora of use cases. However, its power also makes it a prime target for attackers looking to exploit vulnerabilities. In this talk, we will discuss the need for GenAI security and the challenges involved in defending against GenAI attacks. We will highlight the complexity of the threat landscape and the difficulty of detecting threats when use cases are constantly evolving. Join us as we explore this largely uncharted domain and discuss our efforts at Intuit to break new ground with GenOS and GenSRF.

Ido Farhi

Senior Data Scientist at Intuit

10:40-11:10

Making AI development easy with Prompt Flow

Prompt Flow is an open-source development tool designed to streamline the entire development cycle of AI applications powered by LLMs. Prompt Flow simplifies prototyping, experimenting, iterating on, and deploying your AI applications, and it has its own VS Code extension.

In this session I'll introduce Prompt Flow and demonstrate how you can use it to build a chat flow, evaluate that flow, and use Prompt Flow as an LLMOps tool.

Lior King

Sr. Cloud Solutions Architect, Data & AI at Microsoft

11:10-11:30

Unlocking the potential of MLOps & LLMOps: current and future business applications

In this presentation, you'll explore how Weights & Biases is transforming the landscape of machine learning and generative AI. Key takeaways include:

- A technical overview and live demo of the Weights & Biases platform

- Insights into why leading AI teams trust Weights & Biases to scale and productionize ML and GenAI projects

- Real-world case studies showcasing the power of a seamless approach to AI-driven solutions

Yuval Shmuel

W&B presale Israel at Dorcom

11:30-11:50

Exploring Function Calling with Gemini

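On their own, LLMs are limited to what they learned in training: they can't access up-to-date information or take meaningful actions in the real world. Function calling addresses these gaps by letting the model request calls to functions that the application defines and executes, with the results fed back to the model. This session explores how function calling expands what LLMs can do.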

Sveta Morag

Cloud Solutions Architect at Google Cloud
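To make the idea concrete, here is a minimal, SDK-agnostic sketch of the application side of a function-calling loop. It does not use the Gemini API: it assumes the model's requested call arrives as JSON of the form `{"name": ..., "args": {...}}`, and the `get_stock_level` tool and its inventory data are hypothetical stand-ins for a real external system.

```python
import json
from typing import Any, Callable

# Hypothetical external system: illustrative data only.
_INVENTORY = {"sku-123": 7, "sku-456": 0}


def get_stock_level(sku: str) -> dict[str, Any]:
    """Hypothetical tool: look up how many units of a product are in stock."""
    return {"sku": sku, "in_stock": _INVENTORY.get(sku, 0)}


# Registry mapping the function names the model may request to Python callables.
TOOLS: dict[str, Callable[..., dict[str, Any]]] = {"get_stock_level": get_stock_level}


def handle_function_call(model_output: str) -> str:
    """Execute a function call requested by the model and serialize the result.

    Assumes the model emitted JSON like {"name": "...", "args": {...}}.
    """
    call = json.loads(model_output)
    fn = TOOLS.get(call["name"])
    if fn is None:
        # Report unknown functions back to the model instead of crashing.
        return json.dumps({"error": f"unknown function {call['name']}"})
    result = fn(**call.get("args", {}))
    # In a full loop, this JSON is sent back to the model as the function
    # response, and the model uses it to compose its final answer.
    return json.dumps(result)
```

In a real integration the SDK would normally hand you the requested call as a structured object rather than raw JSON, and the model, not the application, decides when to request a call; the application only validates and executes it.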

11:50-12:20

Break

12:20-12:40

Profiling Buyer Interests Using LLM-Generated Graphs

How can we understand the core interests and passions of our buyers? How can we use this knowledge to introduce them to products they have yet to discover?

While typical recommendations often focus on items similar to those already viewed, our goal is to identify the deeper passions that drive buyers' shopping habits. This allows us to suggest related products from entirely different categories.

We use graphs generated by Large Language Models (LLMs) to represent buyers' interests. These graphs help us distinguish between different user interests and traverse the graph to identify various levels of interest granularity.

Oded Zinman

Applied Researcher at eBay
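As a rough illustration of the graph idea in this abstract, the sketch below stores interests as a hand-written, hypothetical hierarchy (broad interests pointing to narrower ones) instead of an LLM-generated graph, and shows two traversals: collecting every interest at a given granularity level, and walking up from a specific interest to broader ones. It is not eBay's method; the graph contents and function names are assumptions for illustration.

```python
from collections import deque

# Hypothetical interest hierarchy: each key maps a broader interest to narrower
# ones. In the talk such graphs are LLM-generated; this one is hand-written.
INTEREST_GRAPH: dict[str, list[str]] = {
    "outdoors": ["camping", "fishing"],
    "camping": ["tents", "camp cooking"],
    "fishing": ["fly fishing"],
    "tents": [],
    "camp cooking": [],
    "fly fishing": [],
}


def interests_at_depth(graph: dict[str, list[str]], root: str, depth: int) -> list[str]:
    """Breadth-first search returning the interests exactly `depth` edges below `root`."""
    frontier = deque([(root, 0)])
    seen = {root}
    found: list[str] = []
    while frontier:
        node, level = frontier.popleft()
        if level == depth:
            found.append(node)
            continue  # nothing below the requested granularity is needed
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                frontier.append((child, level + 1))
    return found


def broader_interests(graph: dict[str, list[str]], interest: str) -> list[str]:
    """Walk from a specific interest up to its broadest ancestor (assumes one parent each)."""
    parent = {child: p for p, children in graph.items() for child in children}
    path: list[str] = []
    while interest in parent:
        interest = parent[interest]
        path.append(interest)
    return path
```

Two buyers whose specific interests (say, tents and fly fishing) share the broader ancestor "outdoors" could then be shown items from each other's category. The sketch assumes each interest has a single parent and no cycles, which a real LLM-generated graph need not satisfy.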

12:40-13:00

AI Agents in Practice

What are AI agents? What are LLM-based agents? How do they differ from non-agentic AI products? What real-world problems do they solve? What are the essential components for developing an LLM-based agent? What does the technology stack in this field look like? How can we evaluate LLM-based agents? In this session, we will explore these questions and more, examining the architecture, capabilities, and practical applications of agents, with the aim of building a comprehensive understanding that helps us create agentic workflows within our organizations.

Lior Cohen

Senior Data Scientist at NVIDIA

13:00-13:20

How to build GenAI agents that actually work

Talking about GenAI agents is easy, but building ones that actually work is very hard.

In this talk I will briefly introduce GenAI agents and discuss the three biggest challenges in implementing them: accuracy and user trust, latency, and cost.

I will share a general framework for building GenAI agents that combines several techniques, including building blocks, planning, and evaluation methods.

I will also discuss how we at AI21 Labs use these methods to deliver agent-based products that actually work.

Amit Mandelbaum

Tech Lead at AI21 Labs

13:20-13:40

Near-Realtime RAG on Production Data: Bringing AI to the Data

Retrieval-augmented generation (RAG) implementations are becoming increasingly popular, and there is a growing demand for these systems to operate at near-realtime speeds and at scale.

In this session, we present our approach to meet these challenges by bringing the algorithms closer to the data. This involves integrating vector search capabilities with popular databases, enabling RAG implementations on production data.

Specifically, we discuss the necessity of vector data types and the development of advanced index solutions, such as graph-based or inverted-file vector indices, to handle large-scale data efficiently.

By incorporating these features into existing database systems, we aim to unlock the potential for generative AI applications on a grand scale. This session will explore the current state of vector search capabilities, RAG implementations, and other generative AI-related features in a leading database system, highlighting the opportunities and challenges of deploying near-realtime RAG on production data.

Boris Dahav

Data, Analytics & AI Domain Specialist at IMOD Division, Oracle
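For readers unfamiliar with inverted-file indices, the sketch below builds a toy IVF index in pure Python: k-means centroids partition the vectors into buckets, and a search scans only the `nprobe` buckets nearest the query, trading some recall for speed. It is a teaching sketch under our own assumptions (Euclidean distance, plain Lloyd's k-means, in-memory lists), not how Oracle or any other database implements its vector indices.

```python
import math
import random

Vector = list[float]


class IVFIndex:
    """Toy inverted-file (IVF) vector index.

    Vectors are bucketed by their nearest k-means centroid; a query scans only
    the buckets ("inverted lists") of its `nprobe` closest centroids.
    """

    def __init__(self, vectors: list[Vector], n_lists: int, iters: int = 10, seed: int = 0):
        self.vectors = vectors
        dim = len(vectors[0])
        rng = random.Random(seed)
        # Initialize centroids from randomly chosen data points.
        self.centroids = [list(v) for v in rng.sample(vectors, n_lists)]
        # A few rounds of k-means (Lloyd's algorithm) to refine the centroids.
        for _ in range(iters):
            for c, members in enumerate(self._assign()):
                if members:  # leave a centroid in place if its bucket is empty
                    self.centroids[c] = [
                        sum(vectors[i][d] for i in members) / len(members) for d in range(dim)
                    ]
        # Final inverted lists: centroid index -> ids of the vectors in its bucket.
        self.lists = self._assign()

    def _nearest_centroid(self, v: Vector) -> int:
        return min(range(len(self.centroids)), key=lambda c: math.dist(v, self.centroids[c]))

    def _assign(self) -> list[list[int]]:
        lists: list[list[int]] = [[] for _ in self.centroids]
        for i, v in enumerate(self.vectors):
            lists[self._nearest_centroid(v)].append(i)
        return lists

    def search(self, query: Vector, k: int = 1, nprobe: int = 1) -> list[int]:
        """Return ids of the k nearest vectors found in the nprobe closest buckets."""
        probe = sorted(range(len(self.centroids)), key=lambda c: math.dist(query, self.centroids[c]))[:nprobe]
        candidates = [i for c in probe for i in self.lists[c]]
        return sorted(candidates, key=lambda i: math.dist(query, self.vectors[i]))[:k]
```

With `nprobe` equal to the number of lists, the search is exhaustive and matches brute force; smaller values scan fewer candidates but may miss true neighbors that fall in an unprobed bucket.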

13:40-14:00

Building the Future: AI Infrastructure in the GenAI Era

Compute power and modern GPU infrastructure are at the heart of the recent GenAI revolution. Companies, research institutes, and countries consider them a strategic enabler for their future success in AI. In this talk we’ll delve into the AI infrastructure space and share challenges and best practices for compute orchestration and utilization.

Ronen Dar

Co-Founder and CTO at Run:ai

14:00-15:00

Lunch Break

15:00-15:20

Is My LLM Doing a Good Job? Evaluating LLMs at Scale: Lessons Learned from Lightricks

As large language models (LLMs) become increasingly integrated into industry workflows, a pressing question arises: how do we know if our LLMs are truly delivering value across different user interactions?

This talk, drawing on lessons learned at Lightricks, explores real-world challenges in evaluating LLMs at scale, particularly for creativity-related use cases where there is no single correct answer. We’ll dive into the challenges we faced and share the evaluation framework we developed to align with specific business objectives and user experiences. Attendees will gain practical insights into measuring LLM effectiveness in dynamic, real-world environments, ensuring models are efficient and impactful for their intended use cases.

Asi Messica

VP Data Science at Lightricks

15:20-15:40

AI-Driven Meeting Prep Summaries: Helping with Client Preparation

In this talk, I’ll present how we developed an AI-powered tool at HoneyBook that automatically generates tailored meeting summaries for members. The tool analyzes key data points, including previous meeting notes, client context, shared files, and business insights, to give members actionable snapshots before each meeting. This helps them prepare better, engage more effectively with clients, and make data-driven decisions. Attendees will learn how AI can enhance productivity through personalized, context-rich insights, and I will walk through the technical implementation, the challenges we faced, and the results.

Doron Bartov

Staff Data Scientist at HoneyBook

15:40-16:00

Taking AI to the smallest scale

AI and LLMs usually operate at a vast scale, but you can also take ML models and LLMs down to a tiny scale: the IoT world! You can run models even on tiny microprocessors that cost $4. In this session, you will learn how to work with LLMs and ML models on small IoT devices to create exciting, smart devices at low cost and with little effort.

Ran Bar-Zik

Senior Software Architect at CyberArk

16:00-16:20

NumeroLogic: Number Encoding for Enhanced LLMs' Numerical Reasoning

Language models struggle with handling numerical data and performing arithmetic operations. We hypothesize that this limitation can be partially attributed to the non-intuitive textual representation of numbers. When a causal language model reads or generates a digit, it does not know the digit's place value (e.g., thousands vs. hundreds) until the entire number has been processed. To address this issue, we propose a simple adjustment to how numbers are represented: including the count of digits before each number. For instance, instead of "42", we suggest using "{2:42}" as the new format. This approach, which we term NumeroLogic, offers an added advantage in number generation by serving as a Chain of Thought (CoT): by requiring the model to consider the number of digits first, it enhances the reasoning process before the actual number is generated. We use arithmetic tasks to demonstrate the effectiveness of the NumeroLogic formatting. We further demonstrate NumeroLogic's applicability to general natural language modeling, improving language understanding performance on the MMLU benchmark.

Eli Schwartz

Research Scientist & Tech Lead at IBM Research
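The formatting rule described in this abstract is simple enough to sketch. The helpers below are our own illustration, not the authors' code: they rewrite every run of digits as `{digit_count:number}` and strip the prefix back out. They treat each run of digits separately, so a decimal such as 3.14 becomes `{1:3}.{2:14}`; how the paper itself handles decimals and signs is not covered here.

```python
import re

_DIGITS = re.compile(r"\d+")
_ENCODED = re.compile(r"\{\d+:(\d+)\}")


def numerologic_encode(text: str) -> str:
    """Prefix each run of digits with its length, e.g. "42" -> "{2:42}"."""
    # In an f-string, "{{" and "}}" produce literal braces.
    return _DIGITS.sub(lambda m: f"{{{len(m.group())}:{m.group()}}}", text)


def numerologic_decode(text: str) -> str:
    """Remove the digit-count prefixes, restoring the plain numbers."""
    return _ENCODED.sub(r"\1", text)
```

Because the count comes first, a model generating `{4:` has already committed to a four-digit number before it emits the leading digit, which is exactly the place-value information the abstract says plain text withholds.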

09:30-15:30

Building RAG Agents with LLMs

About this Course

The evolution and adoption of large language models (LLMs) have been nothing short of revolutionary, with retrieval-based systems at the forefront of this technological leap. These models are not just tools for automation; they are partners in enhancing productivity, capable of holding informed conversations by interacting with a vast array of tools and documents. This course is designed for those eager to explore the potential of these systems, focusing on practical deployment and the efficient implementation required to manage the considerable demands of both users and deep learning models. As we delve into the intricacies of LLMs, participants will gain insights into advanced orchestration techniques that include internal reasoning, dialog management, and effective tooling strategies.

Learning Objectives

The goal of the course is to teach participants how to:

- Compose an LLM system that can interact predictably with a user by leveraging internal and external reasoning components.

- Design a dialog management and document reasoning system that maintains state and coerces information into structured formats.

- Leverage embedding models for efficient similarity queries for content retrieval and dialog guardrailing.

- Implement, modularize, and evaluate a RAG agent that can answer questions about the research papers in its dataset without any fine-tuning.
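Two of these objectives, similarity-based retrieval and embedding-based guardrailing, rest on the same operation: comparing embedding vectors. The sketch below shows both with cosine similarity in pure Python. It assumes the embeddings were already produced by some embedding model, and the 0.8 guardrail threshold is an arbitrary illustrative value; it is not the course's code or LangChain's API.

```python
import math

Vector = list[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two non-zero vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def retrieve(query: Vector, docs: list[Vector], k: int = 2) -> list[int]:
    """Indices of the k documents whose embeddings are most similar to the query."""
    ranked = sorted(range(len(docs)), key=lambda i: cosine_similarity(query, docs[i]), reverse=True)
    return ranked[:k]


def violates_guardrail(query: Vector, blocked: list[Vector], threshold: float = 0.8) -> bool:
    """True if the query is close to any example of an off-limits request (threshold is hypothetical)."""
    return any(cosine_similarity(query, b) >= threshold for b in blocked)
```

For example, with made-up document embeddings `[[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.9, 0.1, 0.0]]`, `retrieve([1.0, 0.0, 0.0], docs)` ranks documents 0 and 2 first. A vector store replaces the linear scan in `retrieve` with an index once the collection grows.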

By the end of this workshop, participants will have a solid understanding of RAG agents and the tools necessary to develop their own LLM applications.

Topics Covered

The workshop includes topics such as LLM Inference Interfaces, Pipeline Design with LangChain, Gradio, and LangServe, Dialog Management with Running States, Working with Documents, Embeddings for Semantic Similarity and Guardrailing, and Vector Stores for RAG Agents. Each of these sections is designed to equip participants with the knowledge and skills necessary to develop and deploy advanced LLM systems effectively.

Course Outline

- Introduction to the workshop and setting up the environment.

- Exploration of LLM inference interfaces and microservices.

- Designing LLM pipelines using LangChain, Gradio, and LangServe.

- Managing dialog states and integrating knowledge extraction.

- Strategies for working with long-form documents.

- Utilizing embeddings for semantic similarity and guardrailing.

- Implementing vector stores for efficient document retrieval.

- Evaluation, assessment, and certification.

Level: Practitioners

We offer a discount for IDF soldiers, reservists, and their partners (yes, you can come together). A reserve duty approval certificate is required for this application: attach it when you submit your details, and after you fill out the form we will get back to you with a link to a discount code.

Amit Bleiweiss

Senior Data Scientist at NVIDIA

10:00-13:00

Generative AI on the Go: The AI Revolution on Your AI PC


Join us for a hands-on workshop where you can see the latest AI algorithms in action and try building code yourself.

Spend 3 hours learning about and tinkering with state-of-the-art AI technologies on AI PCs.

What we will learn

- What is an AI PC?
  - Understand the main differences between a CPU, a GPU, and an NPU.
  - Learn which models perform best on each type of device.
  - Discover how to program an AI PC and make the most of its potential.

- Text Generation
  - Explore how large language models (LLMs) generate text.
  - Create a chatbot and delve into retrieval-augmented generation (RAG).

- Image Generation
  - Learn how text-to-image technology works.
  - Generate stunning images or videos using Stable Diffusion and other methods.

- Music Generation
  - Can LLMs generate music?
  - Advanced music editing and music generation on your laptop.

Who should attend

- Enthusiasts eager to learn about AI and its applications.

- Programmers looking to expand their skill set and gain a deeper understanding of AI algorithms.

- Professionals interested in integrating AI into their work.

Requirements

- Basic Python programming skills

- We’ll provide everything else!

Level: Basic+Advanced

Participation in the workshop is mandatory.

We offer a discount for IDF soldiers, reservists, and their partners (yes, you can come together). A reserve duty approval certificate is required for this application: attach it when you submit your details, and after you fill out the form we will get back to you with a link to a discount code.

Guy Tamir

Technology Evangelist at Intel